- {x}

- Word Break II

- Linked List Cycle

- Linked List Cycle II

- Reorder List

- Binary Tree Preorder Traversal

- Binary Tree Postorder Traversal

- LRU Cache

- Insertion Sort List

- Sort List

- Max Points on a Line

- Evaluate Reverse Polish Notation

- Reverse Words in a String

- Maximum Product Subarray

- Find Minimum in Rotated Sorted Array

- Find Minimum in Rotated Sorted Array II

- Min Stack

- Binary Tree Upside Down

- Read More...

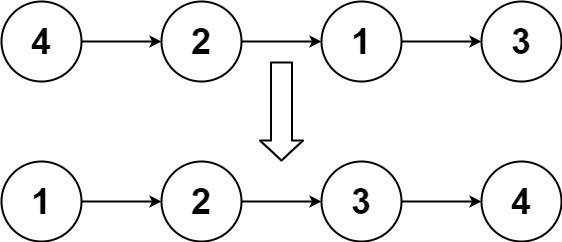

Sort List

Given the head of a linked list, return the list after sorting it in ascending order.

Example 1:

Input: head = [4,2,1,3] Output: [1,2,3,4]

Example 2:

Input: head = [-1,5,3,4,0] Output: [-1,0,3,4,5]

Example 3:

Input: head = [] Output: []

Constraints:

- The number of nodes in the list is in the range

[0, 5 * 104]. -105 <= Node.val <= 105

Follow up: Can you sort the linked list in O(n logn) time and O(1) memory (i.e. constant space)?

148. Sort List

Description

Given the head of a linked list, return the list after sorting it in ascending order.

Solution: Merge Sort

This problem is efficiently solved using a merge sort approach for linked lists. Merge sort is a divide-and-conquer algorithm well-suited for linked lists because it avoids the need for large-scale data movement in memory.

Algorithm:

-

Base Case: If the list is empty or has only one node, it's already sorted, so return it.

-

Find Middle: Use the fast and slow pointer technique to find the middle of the linked list. The slow pointer moves one step at a time, while the fast pointer moves two steps. When the fast pointer reaches the end, the slow pointer will be at the middle.

-

Divide: Split the list into two sublists at the middle.

-

Recurse: Recursively sort the two sublists.

-

Merge: Merge the two sorted sublists into a single sorted list. This involves iterating through both sublists, comparing the values of the current nodes, and adding the smaller node to the result list.

Code (Python):

# Definition for singly-linked list.

# class ListNode:

# def __init__(self, val=0, next=None):

# self.val = val

# self.next = next

class Solution:

def sortList(self, head: Optional[ListNode]) -> Optional[ListNode]:

if not head or not head.next:

return head

slow, fast = head, head.next

while fast and fast.next:

slow = slow.next

fast = fast.next.next

second = slow.next

slow.next = None # Split the list into two halves

left = self.sortList(head)

right = self.sortList(second)

return self.merge(left, right)

def merge(self, l1: Optional[ListNode], l2: Optional[ListNode]) -> Optional[ListNode]:

dummy = ListNode()

tail = dummy

while l1 and l2:

if l1.val < l2.val:

tail.next = l1

l1 = l1.next

else:

tail.next = l2

l2 = l1.next

tail = tail.next

tail.next = l1 or l2 # Attach the remaining nodes

return dummy.next

Time and Space Complexity:

-

Time Complexity: O(n log n). The merge sort algorithm has a time complexity of O(n log n) in the average and worst cases. Each level of recursion divides the list in half, leading to log n levels. The merge operation takes linear time O(n) at each level.

-

Space Complexity: O(log n). The space complexity is determined by the recursive call stack. In the worst case (a completely unbalanced tree, though unlikely with a linked list), the recursion depth is proportional to the logarithm of the input size (log n), which represents the space used by the call stack. This is because we are recursively dividing the list until we have lists of size 1. The merge operation itself doesn't use extra space proportional to the input size.

The code in other languages (Java, C++, Go, TypeScript, Rust, JavaScript, C#) follows a very similar structure, adapting the syntax and data structures accordingly. The core algorithm remains consistent across all implementations.